How it works

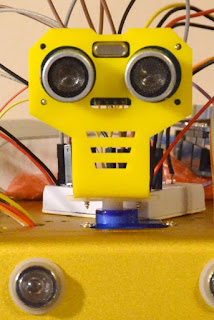

It is time to teach our robot to navigate autonomously around the room. Some time ago we installed the HC-SR04 ultrasonic distance sensor with its mounting bracket, but up to this point it has served only as a nice decoration.

A good explanation of how the ultrasonic distance sensor works is provided on the Arduino web site: Ultra-Sonic "Ping" Sensor.

The idea is quite simple. The sensor emits an ultrasonic signal from its speaker. If there is an obstacle in front of the sensor, the signal bounces back and is detected by the sensor's microphone. Knowing the speed of sound (approx. 340 m/s) and measuring the time from the signal emission until the echo returns, we can calculate the distance traveled by the signal. The obstacle is at half of that distance, since the sound travels there and back.

Of course, physicists will tell you that the speed of sound is not constant and depends on the air temperature. So you should also measure the temperature and use the following formula instead:

Speed of Sound (m/s) = 331.5 + (0.6 * temperature in °C)

They will also tell you that different surfaces reflect sound differently. So you can't rely on the ultrasonic sensor in mission-critical tasks.

We are not creating a Mars rover or a self-driving car, but bugs in the hardware or software can still cause serious trouble. At the very least, you can accidentally perform a "rapid unscheduled disassembly" of your robot. In the worst case, it can miss the edge of the stairs, fall, and injure you or somebody else.

You must always keep in mind Asimov's Three Laws of Robotics. This is very real and very serious:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

There are also other reported difficulties with using ultrasonic distance sensors:

- Conflicts. When two sensors are placed close to each other, a signal from one can be received by the other, which leads to incorrect distance readings. You can avoid this issue by querying the sensors one after another, so that they never work simultaneously. But this will not help if your robot meets another one like it. No fix in the code can prevent this devastating case of love at first sight.

- Small obstacles are missed. This is really annoying. The sensor can miss obstacles even as big as table legs. This means that you need something else to navigate cluttered spaces.

- Annoyed pets (cats and dogs). The explanation is that pets can hear the high-frequency ultrasonic sound and don't like it. We carefully tested this on our own cat but found nothing except honest excitement and an interest in playing with the new toy. So maybe this is not quite true.

Measuring the Distance

You don't need any special libraries to work with the sensor. Just place a function like the following somewhere in your code:

```cpp
// returns the distance to the obstacle in centimeters
float getDistance() {
  // send a 10 µs trigger pulse
  digitalWrite(triggerPin, LOW);
  delayMicroseconds(2);
  digitalWrite(triggerPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(triggerPin, LOW);

  // measure the length of the echo pulse in microseconds
  unsigned long duration = pulseIn(echoPin, HIGH);

  // floating-point division keeps the fractional part of the result
  return duration / 29.0 / 2.0; // change this line if you prefer inches
}
```
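One thing to be aware of: `pulseIn()` waits for the echo, by default for up to one second, and returns 0 if no pulse arrives. It accepts an optional third argument with a timeout in microseconds, for example `pulseIn(echoPin, HIGH, 30000)`. The raw duration can then be checked before it is trusted. The helper below is a sketch under assumptions: the name `echoToValidCentimeters()` is hypothetical, and the 2 to 400 cm range is the one usually quoted for the HC-SR04, so adjust it for your sensor.

```cpp
#include <stdint.h>

// Assumed usable range of the sensor in centimeters.
const int16_t MIN_RANGE_CM = 2;
const int16_t MAX_RANGE_CM = 400;

// Converts a raw echo duration (µs) to whole centimeters.
// Returns -1 when the reading should not be trusted:
// 0 means pulseIn() timed out, and values outside the
// assumed range are treated as implausible.
int16_t echoToValidCentimeters(uint32_t echoMicros) {
  if (echoMicros == 0) {
    return -1;
  }
  uint32_t cm = echoMicros / 29 / 2;  // same constants as getDistance()
  if (cm < (uint32_t)MIN_RANGE_CM || cm > (uint32_t)MAX_RANGE_CM) {
    return -1;
  }
  return (int16_t)cm;
}
```

Using -1 for "no valid reading" matches the convention the robot's code uses for distances that are not available.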

Looking around

From similar projects, we borrowed an interesting idea: installing the sensor on top of a servo motor. This allows the robot to "look around". Not only can you make better decisions on how to avoid an obstacle, but a robot with such a feature also looks much more charming and friendly.

The ultrasonic sensor together with the bracket does not weigh much. That's why we selected one of the smallest servos: the TowerPro SG90 Mini Gear Micro Servo 9g. It fits perfectly into the porthole in the chassis, which looks as if it was placed there intentionally for this kind of use.

The easiest way to control a servo in the firmware is to use the Servo library, which is included in the standard Arduino libraries.

```cpp
#include "Servo.h"   // connect the library
...
Servo myservo;       // create the servo variable
...
myservo.attach(9);   // use Arduino port #9 to control the servo
myservo.write(90);   // turn the servo to the position 90°
```

Please run this code before installing the bracket. It sets the servo to the middle point, so that you can then install the bracket facing forward.

A standard servo motor can turn in the range between 0° and 180°. If you use simple and inexpensive servos, it is better to avoid the edge positions. The servo gears might not be adjusted precisely, and insisting on an edge position (0° or 180°) can damage them. So narrow the working range to 10° - 170° or less.

On our robot, we use the 90° position as the middle point and rotate the sensor 45° to the left and to the right.
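A simple way to make sure the firmware never asks for an edge position is to pass every angle through a clamping function before it reaches the servo. This is only an illustrative sketch: the constant names and `clampServoAngle()` are assumptions, not identifiers from the robot's source code.

```cpp
// Safe working range recommended in the text for inexpensive servos.
const int SERVO_MIN_ANGLE = 10;
const int SERVO_MAX_ANGLE = 170;

// Hypothetical "head" positions: the middle point and the swing to each side.
const int HEAD_CENTER = 90;
const int HEAD_SWING = 45;

// Limits a requested angle to the safe working range.
int clampServoAngle(int angle) {
  if (angle < SERVO_MIN_ANGLE) return SERVO_MIN_ANGLE;
  if (angle > SERVO_MAX_ANGLE) return SERVO_MAX_ANGLE;
  return angle;
}
```

A call such as `myservo.write(clampServoAngle(HEAD_CENTER - HEAD_SWING));` then turns the head to one side while staying inside the safe range. Which side corresponds to the smaller angle depends on how the servo is mounted.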

Please pay attention: mini servo gears are very delicate. Do not try to change their position by hand, even if the servo is turned off. If it is turned on, you will definitely destroy it, since it is designed to resist any force that tries to change its position.

Our servo was also damaged a bit at the very beginning by a bug in the robot's software. Instead of smooth and gentle head turns, we were sending a stream of contradictory commands, turning the servo furiously in opposite directions. No wonder that, while we were trying to figure out what was going on, the servo's gearbox exploded and got stuck. Later we managed to reassemble it, and it even works. But the turning noise will never be the same again.
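One possible safeguard against this kind of bug (not the fix used in the robot's firmware, just a sketch) is to limit how far the commanded angle may change per update. The function name `stepToward()` and its parameters are hypothetical.

```cpp
// Returns the next angle to command: moves from `current` toward
// `target` by at most `maxStep` degrees per call.
int stepToward(int current, int target, int maxStep) {
  if (target > current + maxStep) return current + maxStep;
  if (target < current - maxStep) return current - maxStep;
  return target;
}
```

Called at a fixed interval, this turns even wildly alternating targets into small movements around the current position instead of full-speed reversals.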

A very interesting explanation of the servo internals and build principles can be seen here: Electronic Basics #25: Servos and how to use them.

Wandering Around Autonomously

Algorithm

To try the ultrasonic sensor in a real-life environment, we decided to implement a simple scenario of wandering around the room:

- The robot goes forward until the sensor detects an obstacle.

- If an obstacle is detected, the robot stops and checks whether there are any obstacles to the left and to the right.

- The robot turns in the direction where more free space was detected. If there is no big difference between the space on the left and the space on the right, the turning direction is chosen randomly.

- Go back to the first step.

With such firmware, the robot can wander around endlessly, avoiding collisions with the walls and other obstacles.
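The decision step of this algorithm can be sketched as a small pure function. This is an illustration under assumptions rather than the robot's actual code: `chooseTurn()`, the `tolerance` parameter (what counts as "no big difference") and the caller-supplied coin flip are all made up for the example.

```cpp
#include <stdint.h>

enum TurnDirection { TURN_LEFT, TURN_RIGHT };

// Picks the side with more free space. When the two readings differ by
// no more than `tolerance` centimeters, the caller-supplied coin flip decides.
TurnDirection chooseTurn(int16_t leftCm, int16_t rightCm,
                         int16_t tolerance, bool coinFlip) {
  int diff = leftCm - rightCm;
  if (diff < 0) diff = -diff;
  if (diff <= tolerance) {
    return coinFlip ? TURN_RIGHT : TURN_LEFT;
  }
  return (leftCm > rightCm) ? TURN_LEFT : TURN_RIGHT;
}
```

On an Arduino the coin flip could come from `random(2) == 0`. Passing it in as a parameter keeps the function deterministic and easy to test on a desktop.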

Working with the sensor

We created a class RobotDistanceSensor which wraps all the functions required to handle the ultrasonic sensor.

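The distances (in centimeters) can be read using the following methods, all of which are used in the AI code later in this post: getFrontDistance(), getLastFrontLeftDistance() and getLastFrontRightDistance(). A negative value means that no valid reading is available.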

You can easily change the unit of measure to inches by adjusting the getDistance() method. Instead of

return round(duration /29 / 2);

use

return round(duration /74 / 2);
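If you want both units available without editing the method, the two constants can be selected by a parameter. This is a hedged sketch: `DistanceUnit` and `echoToDistance()` are hypothetical names, and the result is truncated to a whole number rather than rounded.

```cpp
#include <stdint.h>

// Approximate constants used in the text: sound needs about
// 29 µs to travel 1 cm and about 74 µs to travel 1 inch.
const uint32_t MICROS_PER_CM = 29;
const uint32_t MICROS_PER_INCH = 74;

enum DistanceUnit { UNIT_CM, UNIT_INCH };

// Converts a round-trip echo duration (µs) to a one-way distance
// in the requested unit.
uint32_t echoToDistance(uint32_t echoMicros, DistanceUnit unit) {
  uint32_t microsPerUnit = (unit == UNIT_CM) ? MICROS_PER_CM : MICROS_PER_INCH;
  return echoMicros / microsPerUnit / 2;
}
```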

Measuring the distance in front and to the sides differs a bit. Since the sensor looks forward most of the time, you can read the actual front distance almost instantly. To measure the distances in all directions, however, you need to run a special scenario:

- Start the servo turn rightward.

- Wait until the turning is completed.

- Measure the rightward distance.

- Start the servo turn leftward.

- Wait until the turning is completed.

- Measure the leftward distance.

- Start the servo turn forward.

- Wait until the turning is completed.

- Measure the front distance.

We can't use the delay() function in our code (as hundreds of examples on the Internet do). It blocks everything else while it waits, which would kill the whole idea of multitasking.

That's why the ultrasonic sensor class was created as a task object. It regularly receives control from the main program to process its own scenarios step by step. These steps are often referred to as "states", and the approach of implementing a task as a sequence of such steps is called a state machine.
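The non-blocking waiting that such a task object relies on can be sketched as follows. The robot's code calls `scheduleTimedTask()` and `reachedDeadline()`, but their implementation is not shown in this post, so the `TimedTask` struct below is a hypothetical stand-in. The current time is passed in explicitly (on an Arduino it would come from `millis()`).

```cpp
#include <stdint.h>

// Hypothetical helper, not the author's implementation.
struct TimedTask {
  uint32_t startedAt = 0;  // time when the wait began, in ms
  uint32_t waitMs = 0;     // how long to wait, in ms
  bool armed = false;      // false until the first schedule() call

  // Remembers "now" and the requested delay instead of blocking.
  void schedule(uint32_t nowMs, uint32_t delayMs) {
    startedAt = nowMs;
    waitMs = delayMs;
    armed = true;
  }

  // True once the delay has elapsed. Unsigned subtraction keeps the
  // comparison correct even when the millisecond counter wraps around.
  bool reachedDeadline(uint32_t nowMs) const {
    return armed && (uint32_t)(nowMs - startedAt) >= waitMs;
  }
};
```

The main loop can call `reachedDeadline(millis())` on every pass and do other work in between, which is exactly what a `delay()` call would prevent.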

To launch the distance measurement scenario (state machine), call the querySideDistances() method. It looks like this:

```cpp
void RobotDistanceSensor::querySideDistances() {
  // start measuring from the front-right
  // set the state to "measuring Front-Right"
  dsState = dsMeasuringFR;

  // mark the old cached data as outdated
  lastFLDistance = -1;
  lastFRDistance = -1;

  // wait till servo finish turning
  // it will take either SERVO_DELAY
  // or 2*SERVO_DELAY ms depending
  // on the current servo position
  uint16_t servoDelay = (usServo.read()>F_POS) ? 2*SERVO_DELAY : SERVO_DELAY;

  // send command to the servo to start turning
  usServo.write(FR_POS);

  // schedule the next step after servo will finish the move
  scheduleTimedTask(servoDelay);
}
```

Then the sensor module moves through the states dsIdle → dsMeasuringFR → dsMeasuringFL → dsMeasuringFF → dsIdle, measuring all the distances and waiting as needed for the servo to finish turning.

dsIdle, dsMeasuringFR, dsMeasuringFL and dsMeasuringFF are the state names used in the source code.

The processTask() method drives the program through the scenario. The operating system calls this method regularly and gives it a chance to do the required steps when the appropriate moment comes.

```cpp
void RobotDistanceSensor::processTask() {
  // in the idle state we just do nothing
  if (dsState != dsIdle) {
    // check if it is time
    // to do something according to the scenario
    if (reachedDeadline()) {
      switch (dsState) {
      case dsIdle:
        // this block is rather a ritual:
        // the IDE gets nervous
        // if you miss any cases
        // in the switch operator
        break;
      case dsMeasuringFR:
        // the servo finished turning rightward
        // let's measure the distance
        // and then turn left
        lastFRDistance = getDistance();
        usServo.write(FL_POS);
        // switching the state to
        // "measuring the leftward distance"
        dsState = dsMeasuringFL;
        // and let's wait for 300 ms
        // until the servo finishes turning
        scheduleTimedTask(SERVO_DELAY);
        break;
      case dsMeasuringFL:
        // the servo finished turning leftward
        // let's measure the distance
        // and turn forward
        lastFLDistance = getDistance();
        usServo.write(F_POS);
        // switching the state to
        // "measuring the front position"
        dsState = dsMeasuringFF;
        // and let's wait for 300 ms
        // until the servo finishes turning
        scheduleTimedTask(SERVO_DELAY);
        break;
      case dsMeasuringFF:
        // the servo finished turning forward
        // measure the distance
        lastFDistance = getDistance();
        // remember how old our distance data is
        lastFDistanceTimeStamp = millis();
        // switch to the idle state
        dsState = dsIdle;
        break;
      }
    }
  }
}
```

Once this scenario finishes (after at most about 1200 milliseconds, given the 300 ms servo delay mentioned in the code comments), the measured distances are ready to be retrieved and used by the Artificial Intelligence module.

Artificial Intelligence

In the Artificial Intelligence module, all the intelligence fits into the processTask() method. Again, we use a state machine here. At any time in the AI mode, it can be in one of the following states:

- stateAI_GO - life is beautiful! No obstacles, FULL THROTTLE! Still, from time to time the robot checks whether there are any obstacles in front. If an obstacle is detected closer than 20 cm, the robot stops and starts thinking about where to go next: it asks the ultrasonic sensor module to query the distances around (by calling querySideDistances()), waits until the measurements are ready, and switches to the next state.

- stateAI_QueryDistances - processTask() is activated in this state when the ultrasonic sensor has finished measuring all the distances. The AI decides which direction to turn (depending on the measured distances) and sends a command to the motors to start turning. Then it waits for the motors to finish the turn and jumps to the next state.

- stateAI_Turning - the motors have finished turning. The AI switches back to the state stateAI_GO. Hopefully, in this state we'll see no obstacles, and the AI will command the motors to move ahead.

Here is the piece of code which implements this behavior:

```cpp
switch (currentAIState) {
case stateAI_GO: {
  // get the distance in front
  int8_t distance = robotDistanceSensor->getFrontDistance();
  // sometimes it is not available
  // in this case - wait for 300 ms and try again
  if (distance < 0) {
    scheduleTimedTask(300);
  } else {
    // is there an obstacle closer than 20 cm?
    if (distance < MIN_DISTANCE) {
      // stop the motors
      robotMotors->fullStop();
      // beep with the frustrated voice
      robotVoice->queueSound(sndQuestion);
      // ask the sensor to look around
      robotDistanceSensor->querySideDistances();
      // switch to the next state
      currentAIState = stateAI_QueryDistances;
      // wait 3 seconds
      scheduleTimedTask(3000);
    } else {
      // nothing in front - FULL AHEAD!
      robotMotors->driveForward(MOTOR_DRIVE_SPEED, 350);
      // recheck the distance
      // in 300 milliseconds
      scheduleTimedTask(300);
    }
  }
  break;
}
case stateAI_QueryDistances: {
  // at this moment, the ultrasonic sensor has already finished
  // measuring the side distances
  // let's load them to the local variables
  int8_t FLDistance = robotDistanceSensor->getLastFrontLeftDistance();
  int8_t FRDistance = robotDistanceSensor->getLastFrontRightDistance();
  if ((FLDistance == -1) || (FRDistance == -1)) {
    // it might happen that some distance was not measured for some reason
    // in this case it is better to request measuring them again
    robotDistanceSensor->querySideDistances();
    scheduleTimedTask(3000);
  } else {
    // distances were measured successfully
    if (FLDistance == FRDistance) {
      // if there is the same space leftward and rightward -
      // choose the direction randomly
      if (millis() % 2 == 0) {
        robotMotors->turnRight(MOTOR_TURN_SPEED, MOTOR_TURN_DURATION);
      } else {
        robotMotors->turnLeft(MOTOR_TURN_SPEED, MOTOR_TURN_DURATION);
      }
    } else if (FLDistance < FRDistance) {
      // turn to the direction where
      // more space was detected
      robotMotors->turnRight(MOTOR_TURN_SPEED, MOTOR_TURN_DURATION);
    } else {
      robotMotors->turnLeft(MOTOR_TURN_SPEED, MOTOR_TURN_DURATION);
    }
    // switch to the next state
    currentAIState = stateAI_Turning;
    // setting different values in MOTOR_TURN_DURATION
    // you can change how long the robot turns
    scheduleTimedTask(MOTOR_TURN_DURATION);
  }
  break;
}
case stateAI_Turning: {
  // it is time to stop turning
  // we just jump into the initial state
  // and it will drive the robot forward
  // unless a new obstacle is detected
  currentAIState = stateAI_GO;
  break;
}
}
```

The complete source code is available here: https://github.com/rmaryan/ardurobot/tree/ardurobot-1.2. Feel free to adapt it to your needs as you like.

Issues

In most cases, the robot wanders around as expected. It can easily detect and avoid stand-alone obstacles, and it can even navigate in complicated environments.

Unfortunately, the number of incorrect decisions is still too high. We can't let the robot wander around in a completely autonomous mode.

Major issues:

- The sensor is located too high above the floor. The robot can't see low obstacles. You can move the sensor down, of course, but this will harm the robot's charm index, and the caterpillar tracks will obscure the sensor's side vision.

- Bad control over the situation in front. The width of the sensing area is much smaller than the robot's width. You can turn the robot's head all the time to detect all the obstacles in front, but this will be slow, noisy and impractical.

- Bad control over the situation behind. Since it is impossible to turn the head all the way around, the robot can't see what's going on at the back. The only way to solve this is to add some extra sensors at the rear.

- Dead zone. If the robot somehow gets too close to an obstacle, the sensor stops seeing it. If the tracks slip, you can't even use rotation sensors to detect that the robot is not moving anymore. So more sensors need to be placed at the front: either some alternative distance sensors or mechanical touch sensors.

- Low precision and instability. These factors turned out to be very serious. Even if you handle all the false positives in the program code, it is still impossible to reliably detect sound-absorbing obstacles. This is a physical limitation of this type of sensor which can't be compensated for in software.

- Small obstacles are missed. This includes things even as big as table and chair legs. This is also a fundamental property of the sensor which can't be fixed.
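Software cannot remove the physical limitations listed above, but occasional outliers in the readings can at least be suppressed. One common technique is to take several readings and use their median. The sketch below is not part of the robot's firmware: `medianReading()` is a hypothetical helper, and the buffer size of 9 is an arbitrary choice.

```cpp
#include <stdint.h>

// Returns the median of up to 9 readings (extra readings are ignored),
// or -1 when count is 0. For an even count the upper of the two middle
// values is returned. The input array is not modified.
int16_t medianReading(const int16_t* readings, uint8_t count) {
  int16_t sorted[9];
  if (count == 0) return -1;
  if (count > 9) count = 9;
  for (uint8_t i = 0; i < count; i++) {
    // insertion sort into the local buffer
    int16_t value = readings[i];
    int8_t j = i - 1;
    while (j >= 0 && sorted[j] > value) {
      sorted[j + 1] = sorted[j];
      j--;
    }
    sorted[j + 1] = value;
  }
  return sorted[count / 2];
}
```

With the readings 50, 52, 400, 51 and 49 cm, the single wild value of 400 is ignored and the result is 51. The price is time: every extra reading adds another ping to each measurement.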

The conclusion is the following. The ultrasonic distance sensor alone is not sufficient to navigate reliably in a room. If you really need to do something like this, consider installing a video camera on the robot and recognizing images in real time. You can also equip the robot with lots of distance sensors of different types (ultrasonic, infrared, touch), pointed in all directions. With such a sensor array, your robot will finally learn how to move around without crashing into things. This can also be a good starting point for building a robot that does some more purposeful navigation.

We will keep exploring this further. After all, what is the purpose of our robot's life? Just to help humans learn more and change for the better.
